Artificial intelligence has quietly become one of the most transformative forces reshaping how lawyers work, how courts operate, and how justice itself is administered. What was once science fiction (machines that can read through thousands of legal documents in seconds, predict how judges will rule, or draft complex contracts) is now everyday reality in law firms across the globe. But this transformation brings enormous opportunity alongside genuine challenges that the legal profession is still learning to navigate.

If you’re curious about how artificial intelligence and law intersect, you’ve landed in the right place. This comprehensive guide explores the landscape of artificial intelligence and law, examining both the revolutionary possibilities and the serious questions we must address. Whether you’re a law student, a practicing attorney, a business leader, or simply someone interested in how technology is reshaping institutions, understanding artificial intelligence and law is increasingly essential.

What is Artificial Intelligence and Law, and Why Does It Matter?

Artificial intelligence and law refers to the application of AI technologies—including machine learning, natural language processing, and predictive analytics—to legal tasks and legal decision-making. The connection between artificial intelligence and law isn’t theoretical anymore; it’s operational in hundreds of firms and courts worldwide.

To understand why artificial intelligence and law matters, consider this: lawyers traditionally spend enormous amounts of time on repetitive tasks. A corporate attorney might spend weeks reviewing thousands of contract pages to identify key clauses, obligations, and risks. With artificial intelligence and law applications, that same task can be completed in hours—or even minutes. The efficiency gains are staggering, but the implications run far deeper than just saving time.

The importance of artificial intelligence and law also lies in access to justice. Legal services are expensive, and many people cannot afford them. If artificial intelligence and law can reduce the cost of basic legal work, millions of people might finally get access to legal help they currently cannot afford. That’s not just about convenience; it’s about fundamental fairness in society.

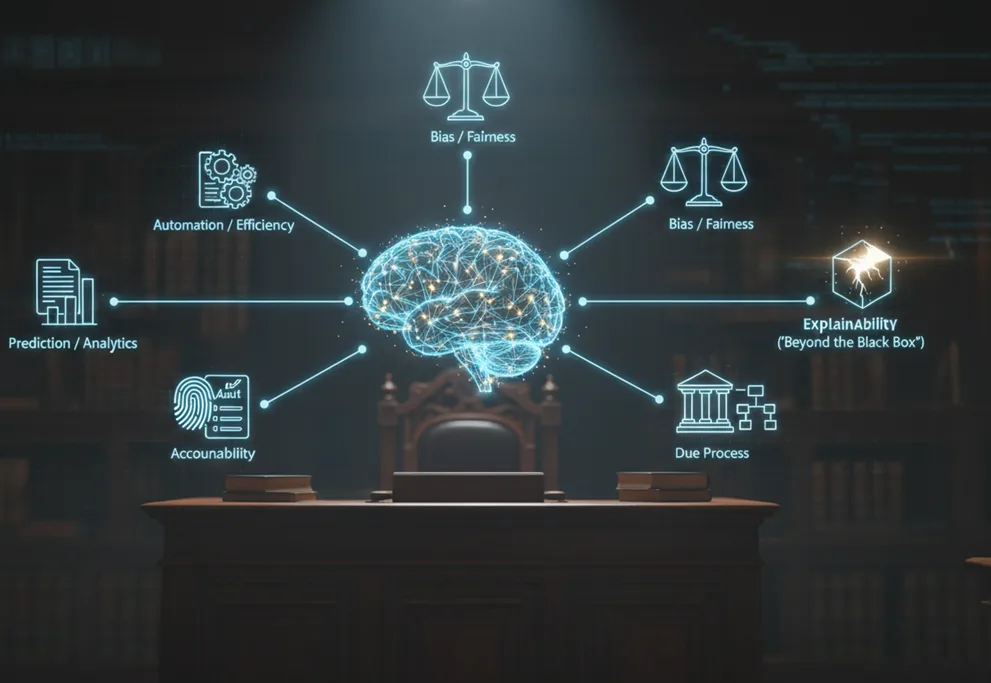

Key Applications of Artificial Intelligence in Legal Practice

The real-world applications of artificial intelligence and law are already reshaping how legal work gets done. Here’s what’s happening right now in law firms and courts:

Legal Research: Finding Needles in Digital Haystacks

Traditionally, legal research meant going through law libraries or databases like Westlaw and LexisNexis, typing in keywords, and hoping you found all the relevant cases. This was time-consuming and imperfect. Lawyers could miss critical precedents simply because they didn’t think of the right search terms.

Natural language processing (NLP) has transformed this process. AI-powered legal research tools like Lexis+ AI and Westlaw Precision are designed to interpret the meaning of your legal question, not just its keywords. Ask such a system about “wrongful termination based on age discrimination,” and it can surface cases involving age discrimination even if they use different terminology, because it treats them as legally relevant to your question. This semantic search capability means lawyers can find more relevant authority faster and with greater confidence that they haven’t missed important precedents.
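To make the difference between keyword matching and meaning-based matching concrete, here is a deliberately tiny Python sketch. Everything in it is hypothetical: the `CONCEPTS` table, the three sample cases, and the function names are invented for illustration. Commercial research tools rely on learned language models rather than a hand-written phrase table, so treat this as an analogy for the idea, not a description of how any product works.

```python
# Toy illustration only: a hand-written concept table stands in for the
# learned language models that real research tools use.
CONCEPTS = {
    "age discrimination": {"age discrimination", "older worker", "too old"},
    "wrongful termination": {"wrongful termination", "unlawful dismissal", "discharged"},
}

# Hypothetical case summaries.
CASES = {
    "Case A": "Employee was discharged after manager said she was too old for the role.",
    "Case B": "Dispute over late delivery of goods under a supply contract.",
    "Case C": "Plaintiff alleges wrongful termination and age discrimination.",
}


def keyword_search(query, cases):
    """Return case names whose text contains the literal query string."""
    q = query.lower()
    return [name for name, text in cases.items() if q in text.lower()]


def concepts_in(text):
    """Return the concept labels whose phrases appear in the text."""
    lowered = text.lower()
    return {
        label
        for label, phrases in CONCEPTS.items()
        if any(phrase in lowered for phrase in phrases)
    }


def concept_search(query, cases):
    """Return case names that share at least one concept with the query."""
    wanted = concepts_in(query)
    return [name for name, text in cases.items() if wanted & concepts_in(text)]


print(keyword_search("age discrimination", CASES))  # ['Case C']
print(concept_search("age discrimination", CASES))  # ['Case A', 'Case C']
```

The keyword search misses Case A because the summary never uses the words "age discrimination", while the concept-based search finds it through the phrase "too old".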

Contract Analysis and Document Review

Contract review is one of the most tedious aspects of legal practice. In a large corporate deal, a legal team might need to review hundreds or thousands of pages of contracts. Every clause matters. Missing a single problematic provision could expose a company to millions of dollars in liability. Yet human reviewers get tired, their attention wanes, and mistakes happen.

AI-driven contract review uses machine learning to extract key information from contracts: parties, dates, payment terms, renewal clauses, liability limitations, and more. AI systems can compare contracts against a company’s standard template and instantly flag deviations. They can identify terms that conflict with a company’s policies. They can spot inconsistencies across a portfolio of contracts. What previously took weeks of human labor can now be done in days or hours, often with greater accuracy.

The impact on due diligence during mergers and acquisitions has been particularly profound. By accelerating contract review, artificial intelligence and law has reduced transaction costs and timelines significantly.
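The idea of comparing a contract against a standard template and flagging deviations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the clause names, the sample text, the `flag_deviations` helper, and the 0.9 similarity threshold are all invented here, and plain text similarity from the standard library's `difflib` is standing in for the machine learning models that real review tools use.

```python
import difflib

# Hypothetical standard clauses, keyed by clause name.
TEMPLATE = {
    "payment": "Payment is due within 30 days of invoice.",
    "liability": "Liability is limited to the fees paid in the prior 12 months.",
    "renewal": "This agreement renews annually unless either party gives 60 days notice.",
}

# Hypothetical counterparty draft: one clause rewritten, one clause absent.
DRAFT = {
    "payment": "Payment is due within 30 days of invoice.",
    "liability": "Liability is unlimited for all claims.",
}


def flag_deviations(template, draft, threshold=0.9):
    """Return (clause, issue) pairs for missing or materially changed clauses.

    The threshold is an arbitrary illustrative cutoff, not an industry standard.
    """
    flags = []
    for name, standard in template.items():
        actual = draft.get(name)
        if actual is None:
            flags.append((name, "missing"))
            continue
        similarity = difflib.SequenceMatcher(None, standard, actual).ratio()
        if similarity < threshold:
            flags.append((name, "changed"))
    return flags


print(flag_deviations(TEMPLATE, DRAFT))
# [('liability', 'changed'), ('renewal', 'missing')]
```

A flag here only means "a human should look at this clause". Character-level similarity cannot tell a harmless rewording from a change in legal effect, which is one reason human review remains part of the workflow.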

Predictive Analytics and Case Outcome Forecasting

Perhaps the most striking application of artificial intelligence and law involves predicting how cases will unfold. Modern AI systems analyze millions of historical court decisions to identify patterns. They track which judges tend to grant certain motions, which types of arguments succeed in particular jurisdictions, how long cases typically take, and what settlement ranges are common for different case types.

These predictive tools are claimed to forecast with around 85% accuracy whether a judge will grant a motion to dismiss, though any such figure depends on the tool, the jurisdiction, and the data behind it. They can estimate the likelihood that a defendant will appeal. They can predict settlement ranges based on comparable cases. For lawyers, this means better strategic planning. You can enter facts from your case and see how similar cases have been decided. You can understand your judge’s decision-making patterns. You can make informed decisions about whether to settle, pursue summary judgment, or take a case to trial.

This application of artificial intelligence and law has profound implications. It democratizes legal expertise by giving attorneys without extensive experience the ability to access predictive insights usually available only to senior partners who have handled hundreds of similar cases.
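At its simplest, tracking which judges tend to grant certain motions is a matter of counting historical outcomes. The Python sketch below shows that baseline idea. The rulings, judge names, and the `grant_rates` function are made up for illustration, and commercial analytics products use far richer features and models than a raw grant rate.

```python
from collections import defaultdict

# Hypothetical historical rulings: (judge, motion type, granted?).
RULINGS = [
    ("Judge A", "motion to dismiss", True),
    ("Judge A", "motion to dismiss", False),
    ("Judge A", "motion to dismiss", True),
    ("Judge A", "motion to dismiss", True),
    ("Judge B", "motion to dismiss", False),
    ("Judge B", "motion to dismiss", False),
    ("Judge B", "motion to dismiss", True),
    ("Judge B", "motion to dismiss", False),
]


def grant_rates(rulings, motion_type):
    """Return each judge's historical grant rate for one motion type."""
    counts = defaultdict(lambda: [0, 0])  # judge -> [granted, total]
    for judge, motion, granted in rulings:
        if motion != motion_type:
            continue
        counts[judge][1] += 1
        if granted:
            counts[judge][0] += 1
    return {judge: granted / total for judge, (granted, total) in counts.items()}


print(grant_rates(RULINGS, "motion to dismiss"))
# {'Judge A': 0.75, 'Judge B': 0.25}
```

With only four rulings per judge, these rates say very little. Sample size is one of the first things to check before relying on any prediction of this kind.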

Judicial Decision Support

Courts themselves are beginning to use artificial intelligence and law tools. Some judges use AI systems to help with legal research, similar to lawyers. Some jurisdictions are exploring AI for case management and scheduling. A few courts have experimented with AI for predictive purposes, such as assessing bail or sentencing risk.

This application of artificial intelligence and law is particularly controversial because it involves fundamental rights and liberty. When an AI system helps decide whether someone gets bail, the stakes are extremely high. Any bias embedded in the system becomes a civil rights issue. This is why artificial intelligence and law in judicial contexts requires the most careful oversight and ethical consideration.

The Revolutionary Benefits of Artificial Intelligence in Legal Practice

When artificial intelligence and law are deployed thoughtfully, the benefits are genuinely transformative:

Cost Reduction for Clients: Legal services are expensive primarily because they’re labor-intensive. When artificial intelligence and law automates routine work, clients pay less. This is already happening. To illustrate the scale, a document review that once cost $100,000 might cost $10,000 with AI doing the heavy lifting. This makes legal services accessible to clients who previously couldn’t afford them.

Enhanced Accuracy: Human reviewers miss things. They get tired. They make typos. They overlook clauses buried in dense legal prose. Artificial intelligence and law systems don’t get tired. They apply the same careful analysis to the first document as the thousandth. Studies show that AI-assisted document review catches risks and problems that human reviewers miss.

Competitive Advantage: Law firms that effectively adopt artificial intelligence and law tools can deliver better work faster and cheaper than competitors. This creates a strong market incentive for adoption. Firms that don’t embrace artificial intelligence and law may struggle to remain competitive as their costs stay high while other firms become more efficient.

Time Freedom for Higher-Value Work: When artificial intelligence and law handles routine research and document review, lawyers are freed to do what they do best—strategic thinking, client counseling, negotiation, and advocacy. Junior lawyers can move faster through early career stages. Senior lawyers can focus on the matters that require human judgment and creativity.

Better Resource Allocation: Law firms can use artificial intelligence and law to forecast demand for different practice areas, predict which cases will require extensive work, and allocate lawyers more efficiently. This means clients get better services and law firms operate more profitably.

Serious Ethical and Legal Challenges with Artificial Intelligence in Law

But artificial intelligence and law is not a panacea. The technology raises profound ethical and legal concerns that the profession is still working to address:

Algorithmic Bias and Discrimination

This is perhaps the most serious concern with artificial intelligence and law. AI systems learn from historical data. If that historical data contains biases, the AI system will perpetuate those biases—often in amplified form.

For example, if an AI system is trained on criminal justice data where certain racial groups have historically received harsher sentences, the system might recommend harsher sentences for defendants from those groups. This isn’t because anyone programmed in racism; it’s because the system learned from biased historical patterns. This application of artificial intelligence and law to criminal justice raises urgent civil rights questions.

Similarly, if a law firm uses an AI system to predict which attorneys are likely to succeed on cases, and that system is trained on historical success data where women and minorities were underrepresented, the AI might systematically recommend against hiring or promoting women and minorities. This would be employment discrimination perpetuated through artificial intelligence and law.

Addressing bias in artificial intelligence and law requires constant vigilance. It means regularly auditing AI systems for disparate impact. It means using diverse training data. It means having humans review AI recommendations, especially in high-stakes contexts. It means being transparent about AI limitations.
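One simple way to audit for disparate impact is to compare how often each group receives a favorable outcome. The Python sketch below computes each group's favorable-outcome rate relative to the best-treated group and flags any group that falls below a cutoff. The group labels, counts, and function names are hypothetical. The 0.8 default echoes the "four-fifths" rule of thumb from US employment selection guidance, and it is used here only as an illustrative assumption, not as a legal test for any particular context.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (favorable count, total count)."""
    return {group: fav / total for group, (fav, total) in outcomes.items()}


def impact_ratios(outcomes):
    """Return each group's rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so there is nothing to compare.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    return [g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold]


# Hypothetical audit data: favorable AI recommendations per group.
AUDIT = {"group_x": (60, 100), "group_y": (30, 100)}

print(flag_groups(AUDIT))  # ['group_y']
```

A flagged group is a signal to investigate further. This single ratio cannot say why the gap exists or whether it is legally significant.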

Hallucinations and Accuracy Problems

Generative AI systems like ChatGPT sometimes generate plausible-sounding but completely fabricated information. In legal work, this is catastrophic. Imagine an attorney submits a brief citing a case that the AI invented. This has actually happened. In 2023, two New York lawyers were fined for submitting a brief with fictional case citations generated by ChatGPT. In a 2025 South African case, lawyers cited cases that never existed and had been generated by AI.

This particular challenge with artificial intelligence and law is especially concerning because the generated content sounds authoritative. It includes proper legal citations and formatting. A lawyer who doesn’t carefully verify every source can easily incorporate false information. This is why artificial intelligence and law requires rigorous human verification, especially for case citations.
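Part of that verification can be made systematic: pull every citation out of a draft and check it against a source you trust. The Python sketch below is a minimal illustration under stated assumptions. The `VERIFIED` set is a stand-in for a lookup in an authoritative database, the regular expression only recognizes simple "volume U.S. page" citations, and the function name is invented. Real citation formats are far more varied.

```python
import re

# Stand-in for a lookup against an authoritative citation database.
VERIFIED = {"410 U.S. 113", "347 U.S. 483"}

# Simplified pattern: matches only "volume U.S. page" style citations.
CITATION_RE = re.compile(r"\b\d{1,3} U\.S\. \d{1,4}\b")


def unverified_citations(text, verified):
    """Return citations found in the text that are not in the verified set."""
    return [cite for cite in CITATION_RE.findall(text) if cite not in verified]


draft = "See 347 U.S. 483 and 999 U.S. 999."
print(unverified_citations(draft, VERIFIED))  # ['999 U.S. 999']
```

A check like this only confirms that a citation exists. It does not confirm that the case says what the brief claims, so reading the source is still the lawyer’s job.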

Privacy and Confidentiality Risks

When lawyers use artificial intelligence and law tools, especially cloud-based systems, they may inadvertently expose client information. Client communications often contain sensitive business secrets, personal information, or other confidential details. If that information is fed into an AI system operated by a third party, there’s risk of exposure.

The ethical rules governing lawyers in most jurisdictions require lawyers to protect client confidentiality. If a lawyer uses artificial intelligence and law without adequate safeguards, the lawyer may be violating those ethical duties. Some lawyers have avoided certain AI tools because they can’t guarantee the necessary data protection. This creates a tension: artificial intelligence and law can make you more efficient, but only if you can do so without compromising client confidentiality.

Transparency and Explainability Issues

Many AI systems, particularly deep learning models, operate as “black boxes.” You can feed data in and get recommendations out, but it’s difficult or impossible to explain why the system made a particular recommendation. In legal contexts, this is problematic.

Imagine a lawyer relies on an AI system to predict case outcomes and recommends that the client settle rather than go to trial. If the client asks “why do you think we should settle?”, the lawyer can’t really explain it because the AI can’t explain its reasoning. This undermines the lawyer’s ability to provide competent counsel. Similarly, judges and the public need to understand how artificial intelligence and law systems reach conclusions, especially in high-stakes matters.

Addressing the transparency problem with artificial intelligence and law requires developing more explainable AI models and ensuring that humans always verify and can explain AI recommendations.

Liability and Accountability Gaps

When artificial intelligence and law produces an error or causes harm, who is responsible? Is it the lawyer who used the system? Is it the company that developed the system? Is it the person who trained the system? The answer is still legally murky, and this uncertainty creates problems.

Lawyers are responsible for competent representation. If a lawyer’s negligent use of artificial intelligence and law causes harm to a client, the lawyer is liable. But what if the AI system itself is at fault? Can the client sue the AI company? The law is still catching up to artificial intelligence and law, and these liability questions remain unresolved in many jurisdictions.

Intellectual Property Questions

Artificial intelligence and law systems are often trained on vast amounts of copyrighted material—legal opinions, law review articles, case law, etc. Does using this material to train AI constitute fair use? When an AI system generates legal writing based on this training, who owns that writing? These IP questions remain unsettled and are likely to generate significant litigation.

The Regulatory Landscape: How Governments Are Approaching Artificial Intelligence and Law

Different countries are taking different approaches to regulating artificial intelligence and law:

The European Union’s Risk-Based Approach

The EU’s AI Act takes a risk-based approach. AI systems are categorized based on the risks they pose. High-risk systems—those that could significantly impact people’s rights or safety—face stricter regulation, including transparency requirements, human oversight mandates, and testing before deployment.

This approach acknowledges that artificial intelligence and law in high-stakes contexts (like criminal justice or employment decisions) requires more oversight than lower-risk applications (like legal research).

The United States’ Fragmented Approach

The United States lacks a comprehensive AI regulatory framework. Instead, regulation is fragmented across different sectors and laws. The SEC regulates AI used in financial services. The FTC enforces against AI systems that engage in deceptive practices. Various states have enacted privacy laws that constrain AI applications.

This fragmented approach means that artificial intelligence and law regulation varies depending on context, which creates compliance challenges for firms operating across multiple jurisdictions.

India’s Emerging Framework

India is developing its own approach to artificial intelligence and law regulation through NITI Aayog’s Responsible AI framework. India’s strategy emphasizes “AI for All,” which means ensuring that AI benefits reach all segments of society while protecting fundamental rights. The framework addresses ethical considerations, accountability mechanisms, and the need for regulatory safeguards.

Given India’s role as a major player in legal services and legal technology, India’s approach to artificial intelligence and law governance will influence global development of the field.

Professional Responsibility and Ethical Obligations

Lawyers have professional responsibilities that constrain how they can use artificial intelligence and law:

Competence: Lawyers must understand the capabilities and limitations of artificial intelligence and law tools they use. Using AI recklessly without understanding how it works violates the duty of competence.

Confidentiality: Lawyers must protect client confidentiality when using artificial intelligence and law. This often means avoiding public cloud-based AI systems or using only AI platforms that provide adequate security and data protection.

Supervision: When artificial intelligence and law is used in a law firm, lawyers must supervise the use and remain responsible for the AI’s outputs. You can’t just delegate to AI and wash your hands of responsibility.

Candor to Courts: When lawyers use artificial intelligence and law to prepare documents filed with courts, they must verify all information and citations. Courts have sanctioned lawyers for submitting AI-generated briefs with fabricated case citations.

Client Communication: Lawyers should generally inform clients when artificial intelligence and law is being used in their matter and should discuss any associated risks.

The Future of Artificial Intelligence in Legal Practice

Where is artificial intelligence and law heading? Several trends seem likely:

Greater Adoption: More firms will adopt artificial intelligence and law tools as the technology improves and more sophisticated legal-specific tools become available. Firms that don’t embrace artificial intelligence and law will struggle to compete on cost and efficiency.

Specialization: Artificial intelligence and law tools will become increasingly specialized for particular practice areas. Patent attorneys might use AI systems trained specifically on patent law. Immigration attorneys might use AI systems trained on immigration matters. This specialization will improve accuracy and relevance.

Regulation and Standards: Governments will likely develop more comprehensive regulation of artificial intelligence and law. Bar associations will likely develop specific ethical guidelines for AI use. Standards for explainability and accuracy will likely emerge.

Job Transformation: Artificial intelligence and law will likely transform legal careers. Some entry-level work (like basic legal research and document review) may be automated away, but new roles will emerge managing AI systems and handling work that requires distinctly human judgment.

Judicial Integration: Courts will likely integrate artificial intelligence and law more extensively for legal research, case management, and possibly even decision support. This will raise important questions about transparency and fairness.

Frequently Asked Questions About Artificial Intelligence and Law

Q: Can AI replace lawyers?

A: No, but it will transform lawyering. AI is excellent at routine analytical tasks like legal research and contract review. But law requires human judgment, negotiation, strategic thinking, and client counseling. For the foreseeable future, artificial intelligence and law will augment what lawyers do rather than replace them. That said, the nature of legal work will change as routine tasks become automated.

Q: Is it safe to use ChatGPT for legal work?

A: ChatGPT and similar general-purpose AI tools pose risks for legal work. They’re prone to hallucinations (fabricating case citations), they may expose confidential information, and they weren’t trained specifically on legal material. Specialized legal AI tools like Lexis+ AI or Westlaw Precision are safer because they’re grounded in authoritative legal sources and include proper citations. However, even with specialized tools, lawyers must verify all outputs.

Q: How do I know if an AI legal tool is trustworthy?

A: Look for tools that: (1) are transparent about how they work; (2) provide citations and explain their reasoning; (3) are trained on authoritative legal sources; (4) have been tested and validated for accuracy; (5) include strong data security and confidentiality protections; (6) have been adopted by reputable law firms; and (7) are compliant with relevant ethical rules and regulations.

Q: What should I do if I find an error in AI-generated legal work?

A: First, immediately disclose the error to your client and take corrective action. Second, report the error to the AI vendor so they can improve their system. Third, consider whether the error resulted from your negligent use of artificial intelligence and law or a flaw in the system itself. The error may create liability, so document what happened. Finally, use the error as a learning opportunity to improve your verification procedures.

Q: Are law schools teaching AI?

A: Increasingly, yes. Law schools are adding courses on legal technology and artificial intelligence and law. However, integration is still uneven. Some schools have comprehensive curricula on AI and law; others have limited offerings. This is an area where legal education is actively evolving to prepare students for an AI-transformed legal profession.

Q: What countries are leading on AI and law regulation?

A: The European Union has taken a leading role with its AI Act. The UK, Canada, and other Commonwealth nations are developing frameworks. India is working on a comprehensive approach. The United States has taken a more hands-off, sectoral approach. China has been more cautious about AI applications to sensitive domains. There’s no global consensus yet, so international fragmentation remains a challenge.

Q: How does artificial intelligence and law affect access to justice?

A: Potentially very positively. If artificial intelligence and law reduces the cost of legal services, more people can afford legal help. AI can make legal research more efficient and accurate. AI can help automate routine legal tasks that consume time and money. However, access to quality AI systems may itself become a barrier—wealthy defendants and corporations can afford sophisticated AI tools while poor defendants and individuals cannot. This could exacerbate inequality unless access is carefully managed.

Q: What’s the biggest risk with artificial intelligence and law?

A: Probably algorithmic bias. If AI systems perpetuate or amplify biases in legal decision-making, the result could be systematic unfairness affecting millions of people. Unlike traditional bias, which occurs at individual moments, algorithmic bias is systematic and scalable. It’s the difference between one biased judge and a biased algorithm affecting decisions nationwide.

Conclusion: Navigating the Future of Artificial Intelligence and Law

Artificial intelligence and law represents one of the most significant developments in legal practice since the advent of computers themselves. The technology offers genuine benefits—greater efficiency, reduced costs, enhanced accuracy, and improved access to justice. But it also raises genuine risks—bias, accuracy problems, privacy violations, and fundamental questions about fairness and accountability.

The legal profession is in the early stages of learning how to use artificial intelligence and law responsibly. Lawyers are developing best practices. Bar associations are issuing ethical guidance. Regulators are beginning to develop frameworks. Researchers are working to improve AI accuracy and explainability.

If you’re a law student, your generation will practice law differently than previous generations because of artificial intelligence and law. You’ll need to understand how AI works, what it can and cannot do, and how to use it ethically and effectively. This is now a core competency for legal practice.

If you’re a practicing attorney, the time to understand artificial intelligence and law is now. Clients are expecting it. Courts are seeing it in briefs. Your competitors are using it. By understanding both the promise and the perils of artificial intelligence and law, you can use the technology strategically to serve your clients better while avoiding the ethical and practical pitfalls.

If you’re a client, understanding artificial intelligence and law helps you ask the right questions about how your legal team is working. It helps you understand whether they’re using appropriate tools and whether they’re applying adequate human judgment and oversight.

The future of artificial intelligence and law is not predetermined. It will be shaped by choices we make now: choices about how to regulate the technology, how to address bias, how to ensure transparency, and how to keep humans in the loop where human judgment matters most. These are challenging choices, but they’re the choices that our time demands.

About Author

Harry Surden is a professor of law at the University of Colorado Law School whose work focuses on the intersection of artificial intelligence and law. A former professional software engineer, he brings a rare combination of technical and legal expertise to questions about how machine learning, large language models, and automation are reshaping legal systems. He is widely recognized for originating the influential concept of “computable contracts” and for landmark articles such as “Artificial Intelligence and Law: An Overview” and “Machine Learning and Law.”

Surden also serves as Associate Director (and affiliated faculty) at Stanford University’s CodeX Center for Legal Informatics, where he helped pioneer research at the frontier of legal informatics and AI. His teaching and scholarship span AI and law, intellectual property, information privacy, and emerging technologies, and his work is frequently cited by academics, practitioners, and policymakers worldwide.